Hao Chen

Email: haoc3 [at] andrew.cmu.edu

| [Google Scholar] | [GitHub] | [Twitter/X] | [LinkedIn] |

About Me

I’m a 4th-year PhD candidate at Carnegie Mellon University (CMU), advised by Prof. Bhiksha Raj. I earned my Master’s degree in Electrical and Computer Engineering from CMU and my Bachelor’s degree from the University of Edinburgh, where I had the privilege of being supervised by Prof. Sotirios Tsaftaris, and Tianjin University. I’ve also been fortunate to collaborate with Prof. Jindong Wang along the way.

Research Interests

My research focuses on building more reliable and adaptable foundation models in realistic scenarios using data-centric methods:

- Exploring Pre-Training Data Imperfections: Investigate the effects of different types of data imperfections (e.g., corruption, bias, diversity) during pre-training and how they influence the physics of foundation models.

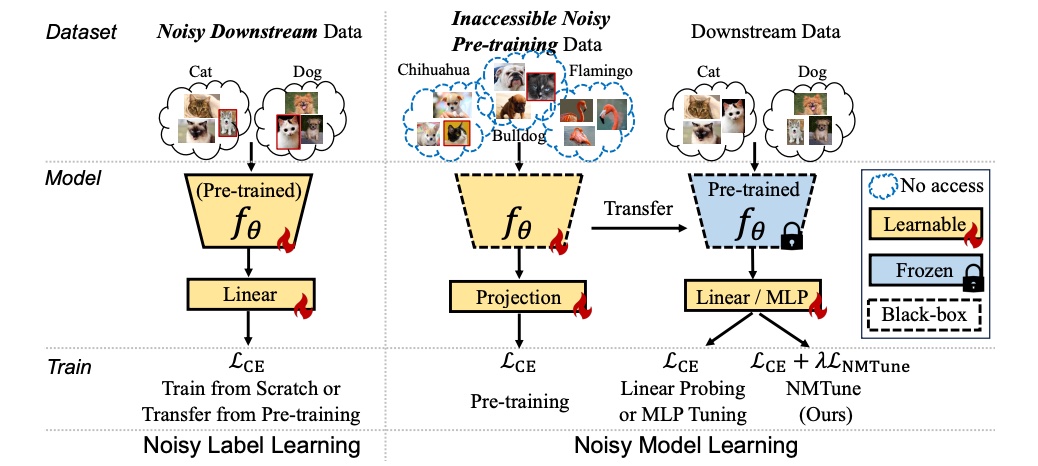

- Understanding and Mitigating Catastrophic Inheritance: Examine how imperfections in pre-training data impact downstream tasks, and develop fine-tuning methods to mitigate the detrimental effects, thereby improving reliability and generalization.

- Leveraging Imperfect Data and Labels for Transfer Learning: Develop robust learning methods to handle various types of imperfect data and labels for effective downstream task adaptation.

- Understanding Multi-Modality Generalization from Individual Components: Advance multi-modality foundation models by understanding their individual components from training data.

I’m open to collaboration on relevant topics. I’m happy to mentor students in research and particularly encourage those from underrepresented groups to reach out.

News

| Date | Update |
|---|---|
| Feb 05, 2025 | We will be holding a tutorial on Unified Semi-Supervised Learning with Foundation Models at AAAI 25. See you in Philadelphia! |
| Feb 05, 2025 | One paper has been accepted by ICLR 2024. |
| Oct 07, 2024 | Three papers have been accepted by NeurIPS 2024, including a spotlight. |
| Sep 02, 2024 | Our vision paper on Catastrophic Inheritance has been accepted by DMLR 2024. |

Selected Publications

-

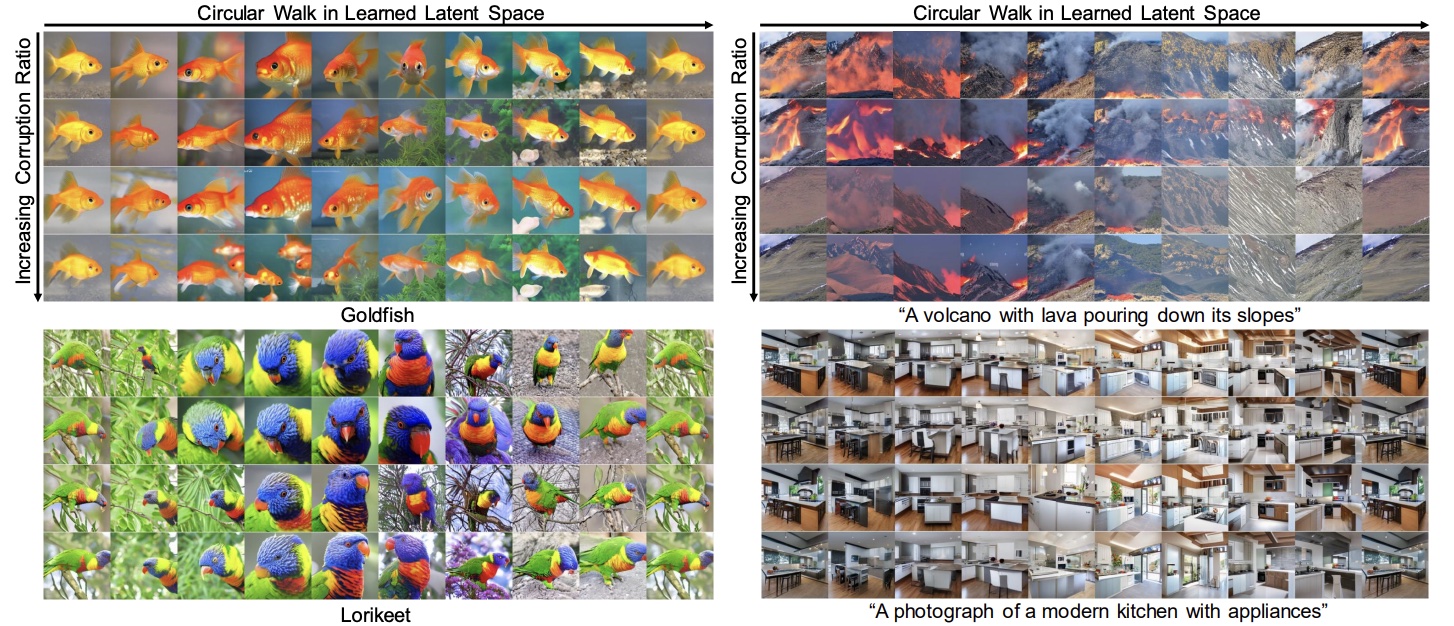

Slight Corruption in Pre-training Data Makes Better Diffusion ModelsIn Neural Information Processing Systems (NeurIPS), Spotlight , 2024

Slight Corruption in Pre-training Data Makes Better Diffusion ModelsIn Neural Information Processing Systems (NeurIPS), Spotlight , 2024 -

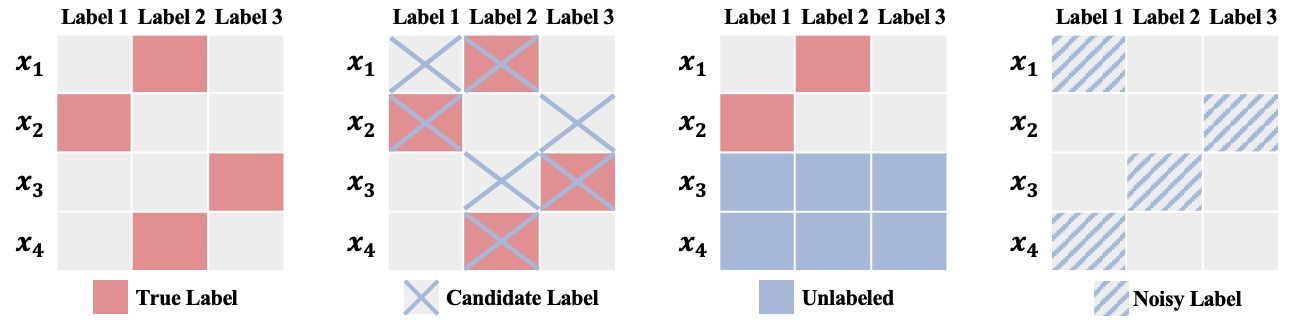

Imprecise Label Learning: A Unified Framework for Learning with Various Imprecise Label ConfigurationsIn Neural Information Processing Systems (NeurIPS) , 2024

Imprecise Label Learning: A Unified Framework for Learning with Various Imprecise Label ConfigurationsIn Neural Information Processing Systems (NeurIPS) , 2024 -

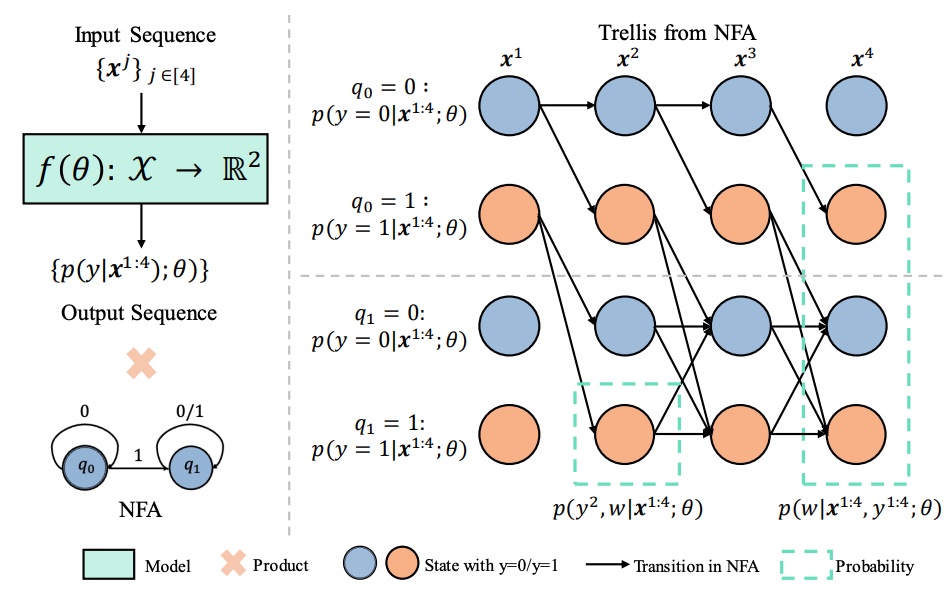

A General Framework for Learning from Weak SupervisionIn International Conference on Machine Learning (ICML) , 2024

A General Framework for Learning from Weak SupervisionIn International Conference on Machine Learning (ICML) , 2024 -

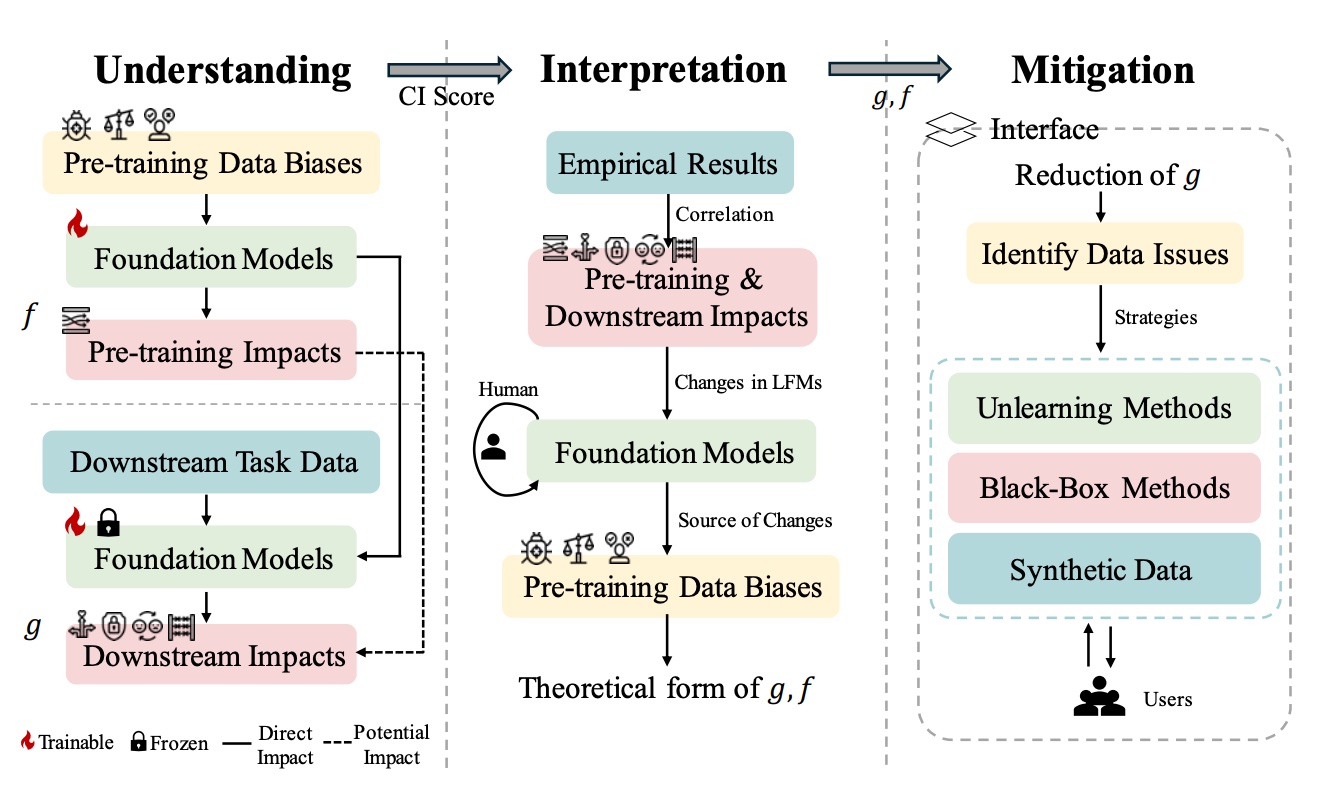

On Catastrophic Inheritance of Large Foundation ModelsJournal of Data-centric Machine Learning Research (DMLR), 2024

On Catastrophic Inheritance of Large Foundation ModelsJournal of Data-centric Machine Learning Research (DMLR), 2024 -

Understanding and Mitigating the Label Noise in Pre-training on Downstream TasksIn International Conference on Learning Representations (ICLR), Spotlight , 2024

Understanding and Mitigating the Label Noise in Pre-training on Downstream TasksIn International Conference on Learning Representations (ICLR), Spotlight , 2024